"If AgentCore were a car, this is the hood you lift when you really want to see how it runs."

Most blogs talk about what AgentCore is. This one explains how it thinks, scales, authenticates, and reasons. Let's get our hands oily. 🧰

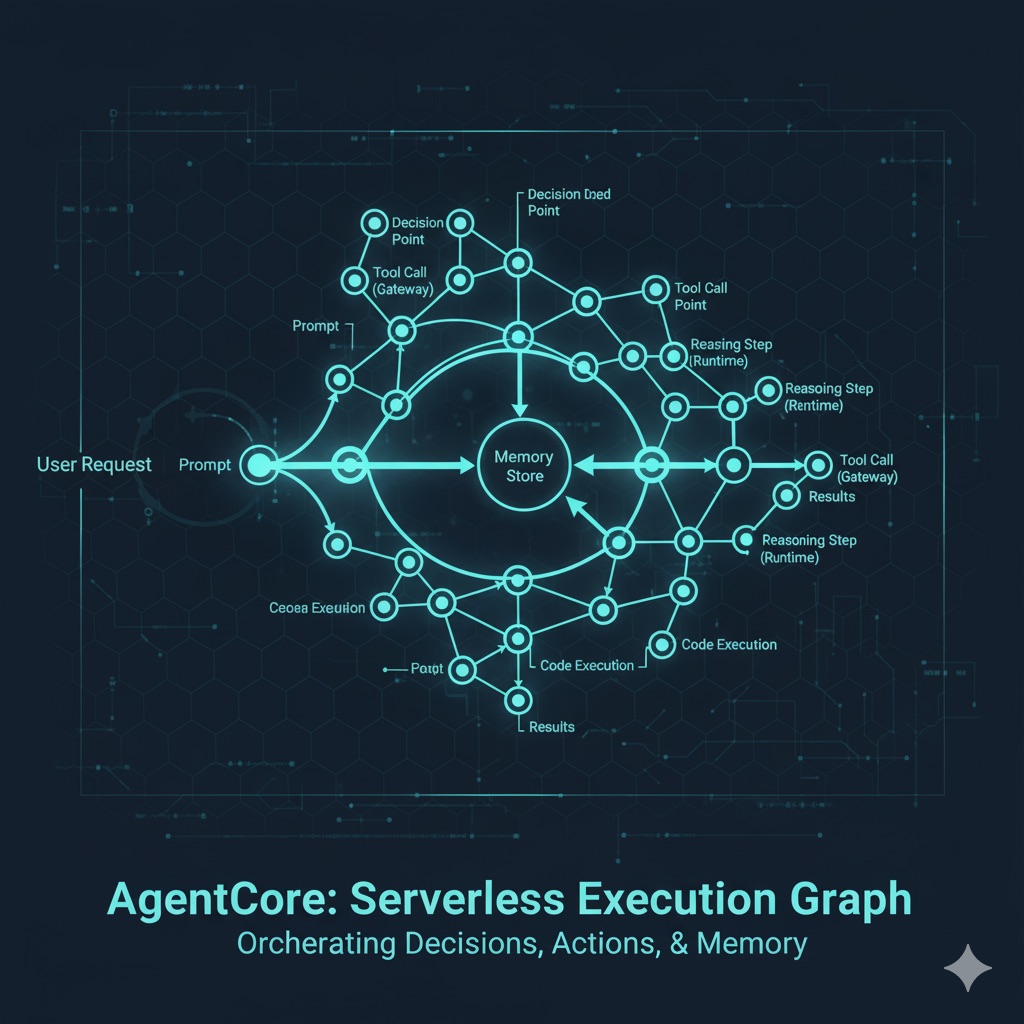

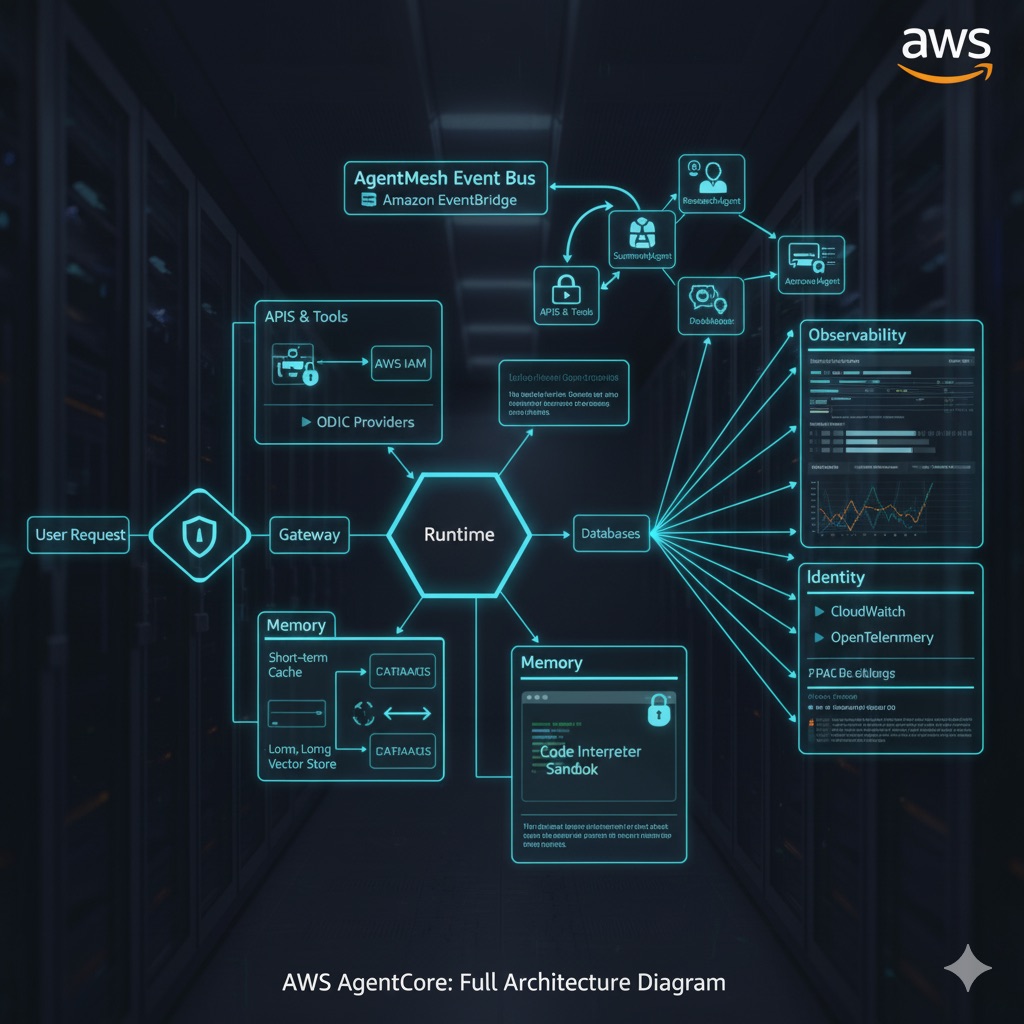

🧩 1. The Core Philosophy: Agent as a Serverless Execution Graph

❌ Traditional AI Systems

✅ AgentCore Approach: a Directed Acyclic Graph (DAG) of actions, decisions, and memory states

Each node in the graph can be:

- 🔧 A tool call (via Gateway)

- 🧠 A reasoning step (via Runtime)

- 💾 A memory update

- 🧪 A code execution

- 🤖 Or even another nested agent

💡 Think of it as Kubernetes for thoughts.

Every reasoning step is isolated, auditable, and composable. If one node fails, AgentCore retries or re-routes automatically, maintaining state integrity.
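To make the graph idea concrete, here is a minimal sketch in plain Python. It is not AgentCore's API: `run_graph`, the node names, and the retry policy are all hypothetical, and it assumes each node is just a function that reads the shared state. It only shows the shape of "run nodes in dependency order, retry a failed node, and never write a partial result into state."

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set


def run_graph(
    nodes: Dict[str, Callable[[dict], object]],
    deps: Dict[str, Set[str]],
    max_retries: int = 2,
) -> dict:
    """Run every node after its dependencies, retrying nodes that raise."""
    state: dict = {}
    # static_order() yields node names so that dependencies always come first
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(max_retries + 1):
            try:
                # State is only written when the node succeeds
                state[name] = nodes[name](state)
                break
            except Exception:
                if attempt == max_retries:
                    raise
    return state


# Hypothetical three-node agent: tool call -> reasoning step -> memory update
calls = {"count": 0}


def flaky_tool(state: dict) -> dict:
    """Fails on the first call only, to exercise the retry path."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise RuntimeError("transient tool failure")
    return {"status": "delivered"}


nodes = {
    "lookup_order": flaky_tool,
    "reason": lambda s: f"Order is {s['lookup_order']['status']}",
    "remember": lambda s: {"saved": s["reason"]},
}
deps = {"lookup_order": set(), "reason": {"lookup_order"}, "remember": {"reason"}}

result = run_graph(nodes, deps)
print(result["remember"])
```

The first tool call fails, the retry succeeds, and the downstream nodes never notice. Re-routing to a different node is left out to keep the sketch short.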

⚙️ 2. The Runtime: Stateless by Design, Stateful by Memory

AgentCore Runtime uses event-driven ephemeral compute — think of it as AWS Lambda 2.0, specialized for agent reasoning.

When an agent runs:

1. Provision Sandbox: AgentCore provisions a lightweight sandbox.

2. Fetch Context: The sandbox fetches relevant context from Memory.

3. Execute: It executes reasoning steps or tool calls.

4. Emit Events: It emits structured events (AgentInvocationEvent, ActionEvent, MemoryUpdateEvent).

5. Log & Vanish: Logs are piped into Observability, and the runtime vanishes.

This means:

- ⚡ Infinite parallelism (every reasoning branch can be a separate execution)

- 🔥 No warm servers

- 📜 Complete traceability

💡 Analogy: Imagine 10,000 tiny brains that spawn, think for a second, then disappear — leaving behind only their conclusions in a shared diary.
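The "tiny brains, shared diary" lifecycle can be sketched in a few lines. This is an illustration only: the event names come from the steps above, but `invoke`, `emit`, `MEMORY`, and `EVENT_LOG` are hypothetical stand-ins, and a thread pool plays the role of the ephemeral sandboxes.

```python
import time
import uuid
from concurrent.futures import ThreadPoolExecutor

# Stand-ins for the Memory and Observability services (both outlive any invocation)
MEMORY: dict = {"session-1": ["user asked about a refund"]}
EVENT_LOG: list = []


def emit(event_type: str, invocation_id: str, **payload) -> None:
    """Append one structured event to the shared log."""
    EVENT_LOG.append(
        {"type": event_type, "invocation_id": invocation_id, "ts": time.time(), **payload}
    )


def invoke(session_id: str, step: str) -> str:
    invocation_id = uuid.uuid4().hex
    sandbox = {}  # 1. provision: fresh local state, nothing carried over
    sandbox["context"] = list(MEMORY.get(session_id, []))  # 2. fetch context
    emit("AgentInvocationEvent", invocation_id, session=session_id)
    result = f"{step} done"  # 3. execute (placeholder for reasoning or a tool call)
    emit("ActionEvent", invocation_id, step=step)  # 4. emit events
    MEMORY.setdefault(session_id, []).append(result)
    emit("MemoryUpdateEvent", invocation_id, added=result)
    return result  # 5. the sandbox dict goes out of scope here


# Each reasoning branch runs as its own independent invocation
with ThreadPoolExecutor() as pool:
    results = list(pool.map(lambda s: invoke("session-1", s), ["plan", "search", "summarize"]))

print(len(EVENT_LOG), "events recorded")
```

Nothing survives an invocation except what it wrote to memory and the event log, which is the whole point of "stateless by design, stateful by memory."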

🔗 3. Gateway: Tool and Data Abstraction Layer

Most LLM agents break when APIs change. AgentCore solves this via Gateway, which provides tool schemas (JSON-based definitions) describing:

Example: Agent Action

    {
      "action": "getOrderStatus",
      "input": { "order_id": "1234" }
    }

Gateway Response

    {
      "status": "delivered",
      "timestamp": "2025-10-12T09:33Z"
    }

💡 Analogy: Gateway is like the universal remote control that works for every TV, API, and tool in your house.
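Here is a rough sketch of how a schema-checked tool layer could sit between an agent and a backend. The `Gateway` class, its `register` and `call` methods, and `order_backend` are hypothetical and are not the real Gateway API; the schema is simplified to "field name maps to Python type." The request and response reuse the example payloads above.

```python
from typing import Callable, Dict


class Gateway:
    """Toy tool registry: validates input against a schema, then dispatches."""

    def __init__(self) -> None:
        self._tools: Dict[str, dict] = {}

    def register(self, name: str, input_schema: Dict[str, type],
                 handler: Callable[..., dict]) -> None:
        self._tools[name] = {"schema": input_schema, "handler": handler}

    def call(self, request: dict) -> dict:
        tool = self._tools.get(request["action"])
        if tool is None:
            return {"error": f"unknown action: {request['action']}"}
        payload = request.get("input", {})
        # Reject the call before it reaches the backend if a field is missing or mistyped
        for field, expected in tool["schema"].items():
            if not isinstance(payload.get(field), expected):
                return {"error": f"field '{field}' must be {expected.__name__}"}
        return tool["handler"](**payload)


def order_backend(order_id: str) -> dict:
    """Stand-in for the real order API; returns a canned response."""
    return {"status": "delivered", "timestamp": "2025-10-12T09:33Z"}


gateway = Gateway()
gateway.register("getOrderStatus", {"order_id": str}, order_backend)

response = gateway.call({"action": "getOrderStatus", "input": {"order_id": "1234"}})
bad = gateway.call({"action": "getOrderStatus", "input": {"order_id": 1234}})
print(response)
print(bad)
```

If the backend API changes, only `order_backend` and its schema need to change; the agent keeps sending the same action.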

🧱 Pro Tip

Developers can register their own tools via:

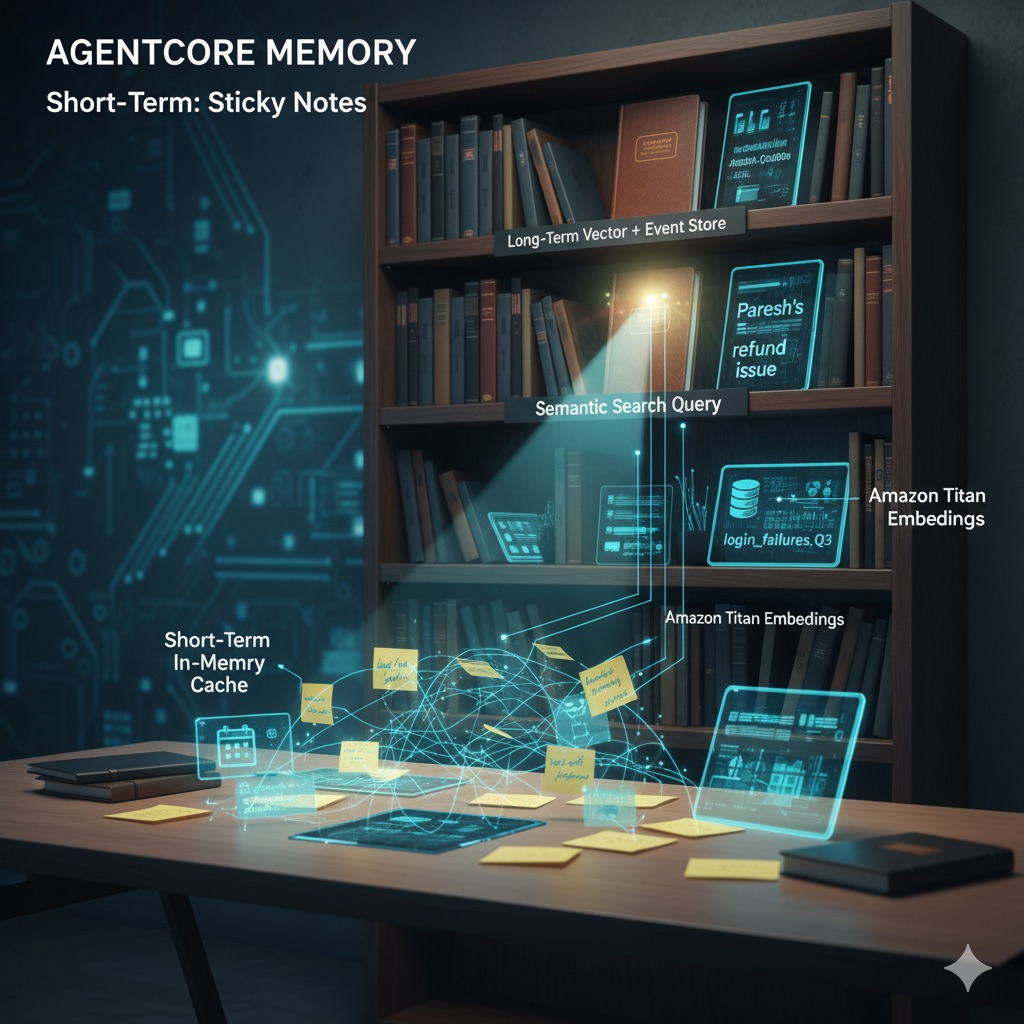

    agentcore gateway register --name crmTool --schema crm_schema.json

🧠 4. Memory: Event Sourcing Meets Semantic Search

| Layer | Type | Purpose |
|---|---|---|
| Short-term | In-memory cache | Context for current session |
| Long-term | Vector + event store | Persistent reasoning history |

Example Query Flow

💡 Analogy:

Short-term = sticky notes on your desk

Long-term = notebooks on your shelf
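A toy version of the two layers, to show how they differ. Everything here is hypothetical: the `Memory` class is not AgentCore's interface, and the "embedding" is a bag-of-words counter standing in for a real embedding model so the sketch runs with the standard library alone.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class Memory:
    def __init__(self) -> None:
        self.short_term: dict = {}                     # sticky notes: per-session cache
        self.events: List[Tuple[str, Counter]] = []    # notebooks: append-only log + vectors

    def remember(self, session_id: str, text: str) -> None:
        self.short_term.setdefault(session_id, []).append(text)
        self.events.append((text, embed(text)))        # events are appended, never edited

    def end_session(self, session_id: str) -> None:
        self.short_term.pop(session_id, None)          # the sticky notes are thrown away

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.events, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = Memory()
memory.remember("s1", "customer requested refund for order 1234")
memory.remember("s1", "refund approved by billing")
memory.remember("s1", "customer asked about shipping times")
memory.end_session("s1")

hits = memory.search("refund status", k=2)
print(hits)
```

After the session ends the short-term cache is empty, but the long-term store can still surface the two refund-related events by similarity.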

🧩 5. Code Interpreter: Secure Ephemeral Compute Layer

AgentCore's Code Interpreter lets agents execute Python, R, or JavaScript code inside a hardened sandbox using micro-VM isolation (like Firecracker, which also powers Lambda).

Why it matters:

- 🚫 No external network calls unless explicitly permitted

- 📊 All execution time and memory are tracked

- 📝 Output is serialized and logged in Observability
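To get a feel for "run it somewhere disposable and measure everything," here is a much weaker stand-in built on a child Python process. It is explicitly not micro-VM isolation and it does not block network access; `run_untrusted` and the shape of its report are hypothetical. It only illustrates the contract: code goes in, a time-limited run happens, and serialized output plus timing comes out.

```python
import subprocess
import sys
import time


def run_untrusted(code: str, timeout_s: float = 5.0) -> dict:
    """Run a snippet in a separate interpreter and report output and wall time."""
    started = time.perf_counter()
    try:
        proc = subprocess.run(
            # -I is Python's isolated mode: ignores PYTHON* env vars and user site-packages
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
        outcome = {"exit_code": proc.returncode, "stdout": proc.stdout, "stderr": proc.stderr}
    except subprocess.TimeoutExpired:
        # The child process is killed once the time budget is exceeded
        outcome = {"exit_code": None, "stdout": "", "stderr": "timed out"}
    outcome["duration_ms"] = round((time.perf_counter() - started) * 1000)
    return outcome


run_report = run_untrusted("print(sum(range(10)))")
print(run_report["stdout"].strip(), run_report["duration_ms"], "ms")
```

A real sandbox would also cap memory and cut off the network, which a plain subprocess cannot do on its own.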

💡 Example: AI Ops Agent

    import pandas as pd

    # Load CPU metrics and smooth them with a 10-sample rolling average
    df = pd.read_csv("metrics.csv")
    df['rolling_avg'] = df['cpu'].rolling(10).mean()
    df.tail()
    # → Returns computed dataframe for visualization

🔐 6. Identity: Auth That Thinks

Instead of static API keys, AgentCore issues Ephemeral Identity Tokens (EITs) with built-in scoping.

Each token binds to:

💡 Analogy: Every agent gets a visitor badge that automatically expires after the meeting.
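The visitor-badge idea can be sketched as a signed, scoped, expiring token. This is an assumption-heavy illustration, not the EIT format: it assumes a token carries an agent ID, a list of scopes, and an expiry time, and the names `issue_token`, `authorize`, and `SECRET` are hypothetical. It uses an HMAC signature from the standard library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import List, Optional

SECRET = b"demo-signing-key"  # hypothetical; a real system would use a managed key


def issue_token(agent_id: str, scopes: List[str], ttl_s: int,
                now: Optional[float] = None) -> str:
    """Issue a badge: claims are base64-encoded and signed with HMAC-SHA256."""
    now = time.time() if now is None else now
    claims = {"agent": agent_id, "scopes": scopes, "exp": now + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims).encode())
    sig = hmac.new(SECRET, body, hashlib.sha256).hexdigest()
    return f"{body.decode()}.{sig}"


def authorize(token: str, required_scope: str, now: Optional[float] = None) -> bool:
    """Accept only untampered, unexpired tokens that carry the required scope."""
    now = time.time() if now is None else now
    body, _, sig = token.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body))
    return now < claims["exp"] and required_scope in claims["scopes"]


token = issue_token("agent-7", ["orders:read"], ttl_s=60, now=1000.0)
print(authorize(token, "orders:read", now=1030.0))   # within scope and lifetime
print(authorize(token, "orders:write", now=1030.0))  # scope not granted
print(authorize(token, "orders:read", now=1061.0))   # badge has expired
```

The `now` parameter exists only so the example is deterministic; in normal use both functions fall back to the current time.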

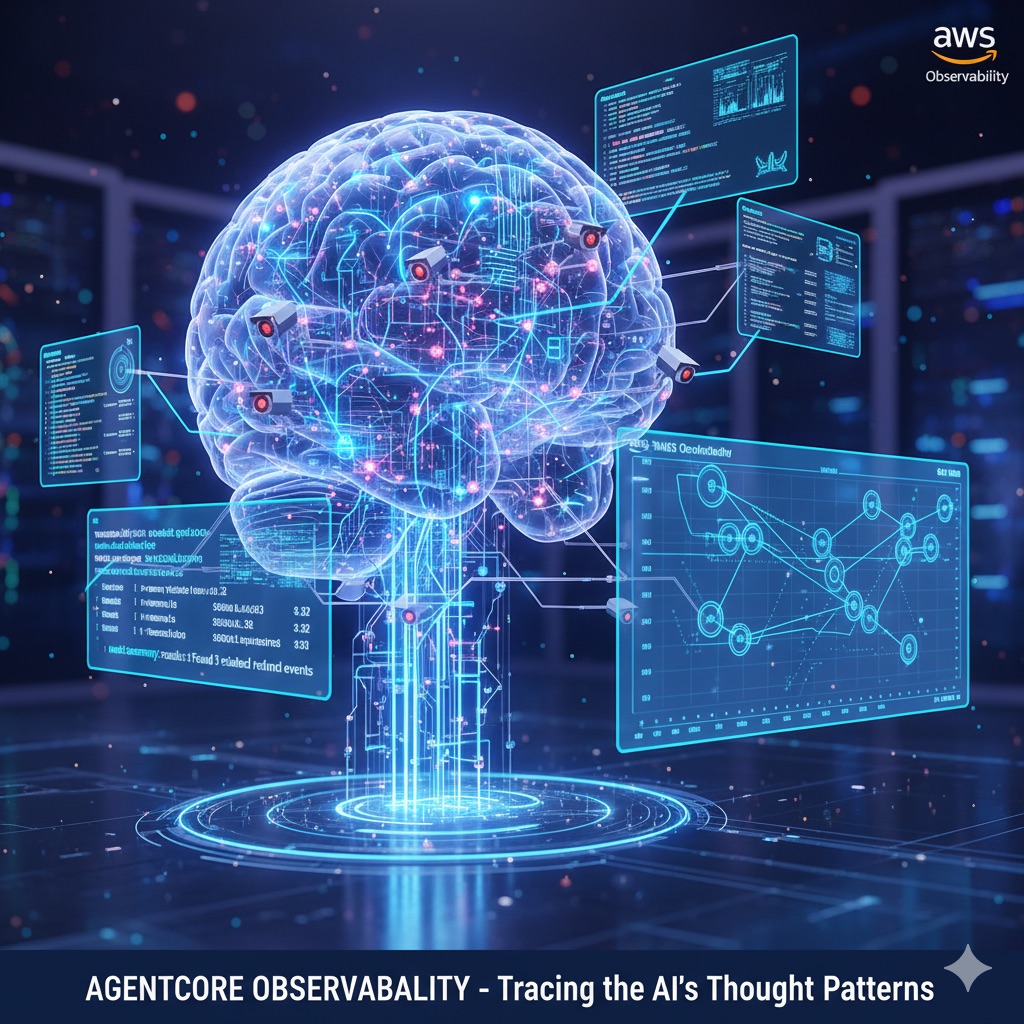

🔍 7. Observability: Tracing Thought Patterns

Observability is not just logging; it's thought tracing. Every decision, tool call, and result is captured in an OpenTelemetry-compatible format.

Example Event

    {
      "trace_id": "a1b2c3",
      "span": "memory.retrieve",
      "duration_ms": 32,
      "result_summary": "Found 3 related refund events"
    }

💡 Analogy: Observability is like a CCTV system inside your AI's brain, watching what it "thought," when, and why.
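An event shaped like the example above could be produced by a small timing wrapper. This sketch uses only the standard library rather than the OpenTelemetry SDK, and the `span` helper and `TRACE` list are hypothetical names chosen for illustration.

```python
import time
import uuid
from contextlib import contextmanager

TRACE: list = []  # stand-in for the Observability backend


@contextmanager
def span(trace_id: str, name: str):
    """Time a block of work and record it as one event, even if the block raises."""
    record = {"trace_id": trace_id, "span": name}
    started = time.perf_counter()
    try:
        yield record  # the caller can attach extra fields such as result_summary
    finally:
        record["duration_ms"] = round((time.perf_counter() - started) * 1000)
        TRACE.append(record)


trace_id = uuid.uuid4().hex[:6]
with span(trace_id, "memory.retrieve") as s:
    matches = ["refund #1", "refund #2", "refund #3"]  # placeholder for a real lookup
    s["result_summary"] = f"Found {len(matches)} related refund events"

print(TRACE[0])
```

Sharing one `trace_id` across every span in a request is what lets you stitch individual steps back into a single line of reasoning.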

🧠 8. Deployment: From Playground to Production

| Mode | Best For | Example |
|---|---|---|
| Local Dev Mode | Testing agents interactively | agentcore run local |
| Serverless (Managed) | Production scaling with Bedrock integration | agentcore deploy --env prod |
| Hybrid Mode | On-prem compute + Bedrock APIs | Connect via VPC endpoints |

💡 Pipeline Example

⚡ 9. Scaling: Event Mesh for Agentic Collaboration

Multiple agents can coordinate via the AgentMesh event bus (built on Amazon EventBridge). This enables multi-agent collaboration:

💡 Analogy: A team of chefs in a kitchen — each has a station, but they all pass plates to complete the meal.
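The plate-passing pattern boils down to publish and subscribe. Below is a minimal in-process sketch; the `EventBus` class, the topic names, and the two "agents" are hypothetical and say nothing about how AgentMesh or EventBridge actually route events.

```python
from collections import defaultdict, deque
from typing import Callable


class EventBus:
    """Tiny in-process pub/sub bus with a FIFO queue."""

    def __init__(self) -> None:
        self._subs = defaultdict(list)
        self._queue = deque()

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subs[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        self._queue.append((topic, payload))

    def drain(self) -> int:
        """Deliver queued events until none remain; returns the delivery count."""
        delivered = 0
        while self._queue:
            topic, payload = self._queue.popleft()
            for handler in self._subs[topic]:
                handler(payload)  # handlers may publish follow-up events
                delivered += 1
        return delivered


bus = EventBus()
summary_log: list = []

# Researcher agent: picks up new tasks and hands its findings to the next station
bus.subscribe(
    "task.created",
    lambda p: bus.publish("research.done", {"topic": p["topic"], "facts": 3}),
)
# Writer agent: turns findings into a summary
bus.subscribe(
    "research.done",
    lambda p: summary_log.append(f"{p['topic']}: {p['facts']} facts summarized"),
)

bus.publish("task.created", {"topic": "refund policy"})
delivered = bus.drain()
print(delivered, summary_log)
```

Neither agent knows the other exists; they only agree on topic names, which is what makes it easy to add a third station later.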

🏁 Why Developers Love It

- Composable: Each module can be replaced or extended

- Secure: IAM and EIT-based isolation by default

- Serverless: Scales per millisecond

- Traceable: Complete reasoning audit trail

- Future-proof: Native support for Bedrock and MCP agents

🧩 Complete AgentCore Architecture

If Bedrock is the factory of LLMs, then AgentCore is the assembly line where robots (agents) come alive — each one equipped to see, think, act, remember, and collaborate.

It's the missing layer between "AI models that talk" and "AI systems that act intelligently."

🎯 Series Conclusion

We've journeyed from understanding what AgentCore is, to seeing how it thinks, exploring real-world applications, understanding its pricing model, and finally diving deep into its technical architecture.

Key Takeaways:

- 🧠 AgentCore is the operating system for AI agents

- ⚡ It uses serverless, event-driven architecture for infinite scale

- 🔒 Security and identity are built-in, not bolted-on

- 💰 Pay-per-use pricing makes it accessible to everyone

- 🌍 Real-world applications are already transforming industries

AgentCore represents a fundamental shift in how we build AI systems — from static models to dynamic, reasoning agents that can truly understand, act, and learn in our world.